Claude 3 just got released, so I decided to try it out on something everyone around me is talking about: how well does AI write a program?

The entire conversation history is here if you’d like to follow along.

Can you write me Othello?

First I used the free version and just asked point-blank, “I’d like to write a Python program that lets two human players play Othello. Can you help me with that?” It gave me a functioning CUI-based Othello program:

```
% python othello_game.py
  0 1 2 3 4 5 6 7
0
1
2
3       W B
4       B W
5
6
7
Player W's turn. Valid moves: [(2, 4), (3, 5), (4, 2), (5, 3)]
Enter your move (row col):
```

I then asked Claude to generate unit tests. The code it gave me looked OK at first glance, but when I ran it, none of the tests passed.

Since the game was playable, I knew the problem was in the tests. I first tried just passing the error messages back to Claude in the hope that it could fix the tests, but all it did was give me supposedly new code that was actually identical to the old code.

At this point it was clear I needed to understand the failure and guide Claude. But as I started to look into what was wrong, I found the code to be unreadable:

```python
tiles = self.game.check_direction(2, 3, 2, 1, 0, 1)
self.assertEqual(tiles, [(3, 4)])
```

2,3,2,1,0,1?? What’s that supposed to mean!?

So I shifted gears a bit and asked Claude to refactor this code. I had a clear idea of how I’d write it, so I gave specific instructions along those lines.

This felt like a painful process. Claude cheerfully obliged with my suggestions, and each time it took a step in the right direction, but it never got far enough. I had to go back and forth a number of times, making minor coding corrections, until the code reached a state I found decent. Each round trip took time, and Claude visibly slowed down as the conversation got longer.

It’s like working with an eager intern. It’s lovable and so enthusiastic, but it needs a lot of handholding, and the code it produces is so mediocre. But then, I know tons of people who can’t even code at that level, so I’m probably setting the bar too high. After all, coding is something I care deeply about.

I also made one suggestion at a time. Looking back, I think this was instinctive, but I wonder what would happen if I made all the suggestions at once.

Somewhere in the middle of this, I ran out of free credit, so I switched to the paid version, which also gives me a better model.

After several more rounds of back and forth, I finally arrived at code I was happy enough with. On several occasions, when I pointed out a problem, it gave me “new” code that was identical to the old code. It felt like talking to a fellow programmer over a phone line instead of looking at the same screen, as if we couldn’t agree on what we were currently looking at. It’s pretty clear to me that the chat format is not optimal for coding, and that in turn makes me think the chat format is probably not optimal for a vast number of other activities either.

Can we test this?

Happy that the code is in a good state, I came back to the unit tests.

The new set of tests it wrote looked rather redundant. For example, I’d definitely define local constants for EMPTY, BLACK, and WHITE, and the name Vector2D is too long for a local concept like this.
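As a rough illustration of test code in the spirit of those suggestions, here is a minimal sketch. Everything in it is hypothetical and not from the transcript: Vector2D is a stand-in NamedTuple, the board is a plain dictionary, and parse_board is an invented helper that reads the position from an ASCII picture, so the starting state of each test is visible at a glance.

```python
import unittest
from typing import NamedTuple


class Vector2D(NamedTuple):
    """Hypothetical stand-in for the game's coordinate type: (row, col)."""
    row: int
    col: int


# Short local names keep each assertion on one readable line.
V = Vector2D
EMPTY, BLACK, WHITE = ".", "B", "W"

# The position under test, drawn the way a human would look at it.
START = """
........
........
........
...WB...
...BW...
........
........
........
"""


def parse_board(picture: str) -> dict:
    """Hypothetical helper: map each non-empty square of an 8x8 picture to its piece."""
    board = {}
    for r, line in enumerate(picture.split()):
        for c, ch in enumerate(line):
            if ch != EMPTY:
                board[V(r, c)] = ch
    return board


class StartingBoardTest(unittest.TestCase):
    def setUp(self):
        self.board = parse_board(START)

    def test_center_squares(self):
        self.assertEqual(self.board[V(3, 3)], WHITE)
        self.assertEqual(self.board[V(3, 4)], BLACK)
        self.assertEqual(self.board[V(4, 3)], BLACK)
        self.assertEqual(self.board[V(4, 4)], WHITE)

    def test_everything_else_is_empty(self):
        self.assertEqual(len(self.board), 4)
```

Saved as a module, this runs with `python -m unittest`. Drawing the board as a picture is one way to make the assumed game state explicit in the test itself.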

But more importantly, none of those tests passed! So the same drama unfolded: I gave it the error messages, it said it knew what was wrong, and then it gave me code identical to the current code…

At this point, I decided to debug the problem myself.

```python
self.assertEqual(self.game.check_direction(Vector2D(2, 3), Player.BLACK, Othello.EAST), [Vector2D(3, 4)])
```

```
AssertionError: Lists differ: [] != [(3, 4)]
```

I wrote back: “I’m pretty sure this is a bug in the test code. Checking direction from (2,3) to east wouldn’t produce (3,4). Maybe you are trying to check south from (2,3), or perhaps east from (3,2).”
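To make the direction reasoning concrete, here is a minimal sketch of what a readable check_direction could look like. It is not the code from the transcript: the names Vector2D, Player, EAST and SOUTH echo the test above, but the free function, the (row, col) convention with rows growing downward, the dictionary board and the helpers shifted, opponent and starting_board are assumptions for illustration.

```python
from enum import Enum
from typing import NamedTuple

SIZE = 8


class Vector2D(NamedTuple):
    """A board square or a direction, as (row, col). Assumed convention."""
    row: int
    col: int

    def shifted(self, delta: "Vector2D") -> "Vector2D":
        return Vector2D(self.row + delta.row, self.col + delta.col)


class Player(Enum):
    BLACK = "B"
    WHITE = "W"

    @property
    def opponent(self) -> "Player":
        return Player.WHITE if self is Player.BLACK else Player.BLACK


# Named directions instead of bare integer pairs like "0, 1".
NORTH, SOUTH = Vector2D(-1, 0), Vector2D(1, 0)
EAST, WEST = Vector2D(0, 1), Vector2D(0, -1)


def starting_board() -> dict[Vector2D, Player]:
    """Standard Othello opening; only occupied squares are stored."""
    return {
        Vector2D(3, 3): Player.WHITE, Vector2D(3, 4): Player.BLACK,
        Vector2D(4, 3): Player.BLACK, Vector2D(4, 4): Player.WHITE,
    }


def check_direction(board: dict[Vector2D, Player], start: Vector2D,
                    player: Player, direction: Vector2D) -> list[Vector2D]:
    """Opponent tiles that `player` would flip along `direction` by playing at `start`."""
    captured = []
    pos = start.shifted(direction)
    while 0 <= pos.row < SIZE and 0 <= pos.col < SIZE:
        occupant = board.get(pos)
        if occupant is player.opponent:
            captured.append(pos)      # keep walking over opponent tiles
        elif occupant is player:
            return captured           # bracketed by our own tile: these flip
        else:
            return []                 # reached an empty square first
        pos = pos.shifted(direction)
    return []                         # ran off the edge of the board


board = starting_board()
print(check_direction(board, Vector2D(2, 3), Player.BLACK, EAST))   # []
print(check_direction(board, Vector2D(2, 4), Player.WHITE, SOUTH))  # [Vector2D(row=3, col=4)]
```

Assuming the test starts from the standard opening, one step east of (2, 3) is the empty square (2, 4), so the walk stops immediately and returns [], which matches what the failing assertion reported. A capture of (3, 4) needs a line that passes through that square, such as White playing at (2, 4) and looking south.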

In response to my message, Claude sent me a lengthy reply that revealed the root cause of the problem. It thought the state of the game before the test was this:

```
  0 1 2 3 4 5 6 7
0
1
2 . B .
3 . W B .
4 . B W
5
6
7
```

I have no idea where that game state came from; it is not a legal Othello position. Claude then proceeded to mumble some more, and eventually gave me tests that did pass, but only by accident.

I pointed out that this is not the board state. Claude responded positively to that, as it always does, and then proceeded to give me a whole new set of assertions, which didn’t pass.

I’m stuck in Groundhog Day.

This is the point where I gave up. Beyond here, it’s much easier to just edit the code myself.

How did it do?

The setup is like a standard coding interview you do when hiring a new software engineer. If I think of Claude that way, it’s unlike any human engineer I’ve interviewed.

No human engineer would produce a functioning Othello game in one shot in 15 seconds, not even the best in the world. That part of it is superhuman.

It’s also clear that it doesn’t really care about elegant, beautiful programs, and so it’s incapable of producing one. In that respect it’s like an average programmer. I regularly hire people who fail this bar of mine, and they thrive at my companies.

Then it’s also inexplicably stupid in some areas. A number of times it was unable to dig itself out of a hole, despite many suggestions and a lot of guidance. Even a relatively junior programmer would do better. I’d definitely pass on candidates who showed this level of incompetence.

Strange mix. Like I said, unlike any human engineer.

Now, if I think of it not as a recruiting interview but as me trying to figure out how to harness an LLM assistant for programming, I have an entirely different take.

Given the way LLMs work, it makes sense that it struggles to pull back from its earlier mistakes. It’s influenced just as much by what it says as by what I say. This makes me wonder what would happen if I edited my earlier message instead of appending more messages, thereby eliminating its mistakes from the conversation history.
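To picture the difference, here is a small sketch of the two ways of continuing a chat. It assumes the history is a list of role/content messages, the shape that chat APIs such as Anthropic’s Messages API accept; no API is called, and the function names and sample messages are made up for illustration.

```python
# A chat history: the model sees this whole list on every turn,
# including its own earlier replies.
Message = dict[str, str]


def append_correction(history: list[Message], correction: str) -> list[Message]:
    """Keep every earlier turn, including the model's wrong reply, and add a new user turn."""
    return history + [{"role": "user", "content": correction}]


def edit_and_retry(history: list[Message], index: int, revised: str) -> list[Message]:
    """Replace the user turn at `index` and drop everything after it,
    so the model never sees its own earlier mistake."""
    if history[index]["role"] != "user":
        raise ValueError("only user turns can be edited")
    return history[:index] + [{"role": "user", "content": revised}]


history = [
    {"role": "user", "content": "Write unit tests for the Othello game."},
    {"role": "assistant", "content": "<tests that fail>"},
]

appended = append_correction(history, "These tests fail; the board state is wrong.")
edited = edit_and_retry(history, 0, "Write unit tests; the board starts in the standard opening.")

print(len(appended), any(m["role"] == "assistant" for m in appended))  # 3 True
print(len(edited), any(m["role"] == "assistant" for m in edited))      # 1 False
```

Both functions return new lists and leave the original history untouched, so the two strategies can be compared side by side.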

I think the way I’d use it is to go from zero to one and then take over from there. It’s easier (though it might actually take longer) for me to edit the code myself than to picture mentally how I would edit it and then find a way to communicate that to Claude.

The conversational format is also not optimal. I’d want an assistant well integrated into the IDE, so it’s no wonder vendors are all working on that.

Let’s do it again

Now that I understood Claude’s behavior a little better, I decided to do this all over again, hoping to make better use of it than the first time.

The entire transcript is here.

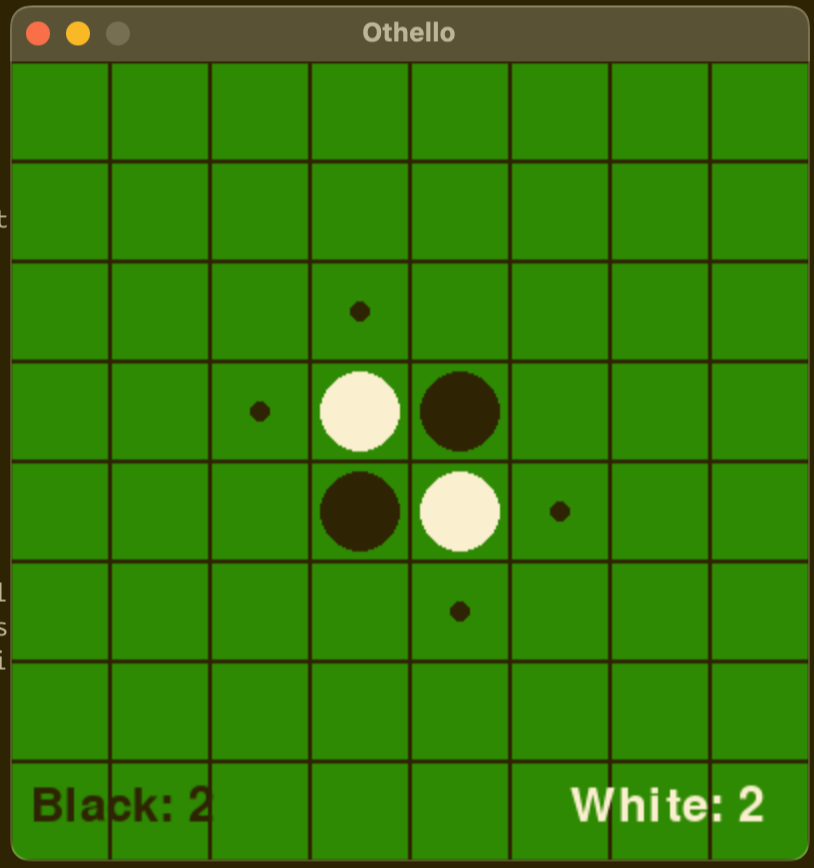

I gave it a bulleted list of what I wanted. This time I also decided to make the game a GUI.

Like the first time, Claude came up with a more or less fully functioning game in no time. Very impressive and superhuman. It would have taken me a lot longer just to learn the basics of pygame.

I decided not to waste time refactoring the code to perfection.

The code is actually a little buggy, too, for example in how it handles the game_over flag.

It’s easier to fix those myself.

I asked it to generate the unit tests, and this time it also gave me tests that passed in one shot. They are not particularly well-designed test cases, but it did much better than the first time, for sure.

I’m feeling much better now. This is just like learning to work with a new colleague: as you get to know them, you change the way you interact with them. This is only my second time with Claude, and I’m already getting along with it much better than the first time.